Brian Winston

PhD Student in Psychological and Brain Sciences

Johns Hopkins University

About

Hey. I’m Brian. Welcome to my website. I am a PhD student in the Center for Psychedelic and Consciousness Research and Department of Psychological and Brain Sciences trying to figure out how psychedelics change the mind and brain. I’ll let you know when I do that. In the meantime, you can check out my publications, posters, talks, sci-comm articles, and more. Don’t hesitate to get in touch!

- Psychedelics

- Cognitive Neuroscience

- Aging and Longevity

PhD in Psychological and Brain Sciences, 2022-present

Johns Hopkins University

B.A. in Philosophy, Neuroscience, and Psychology, 2019

Washington University in St. Louis

Research

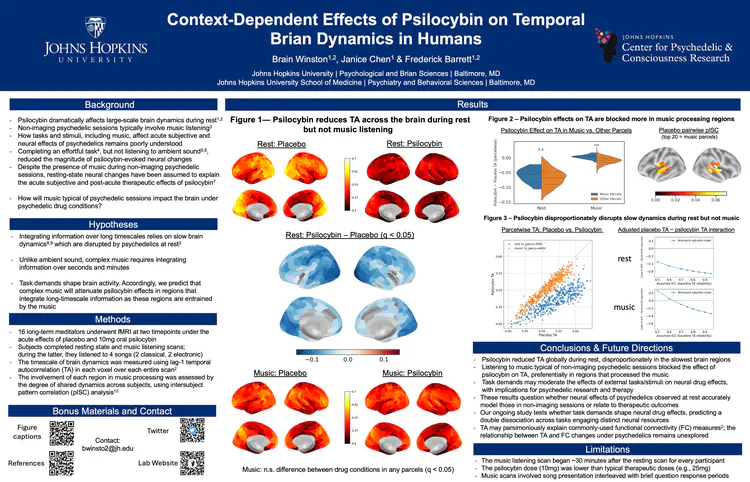

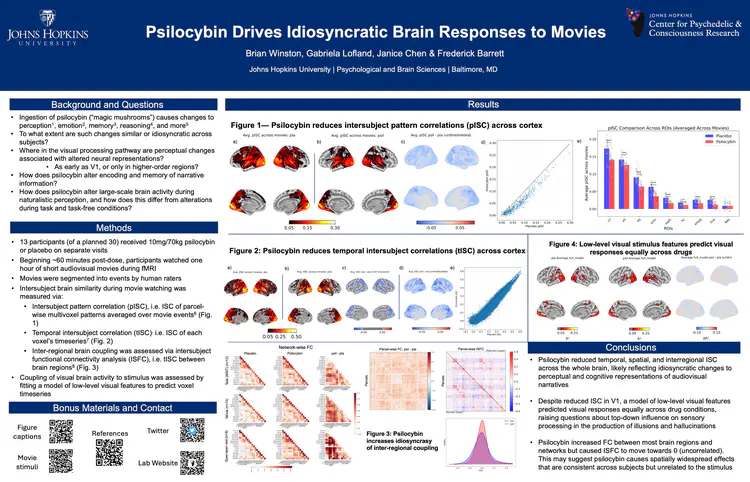

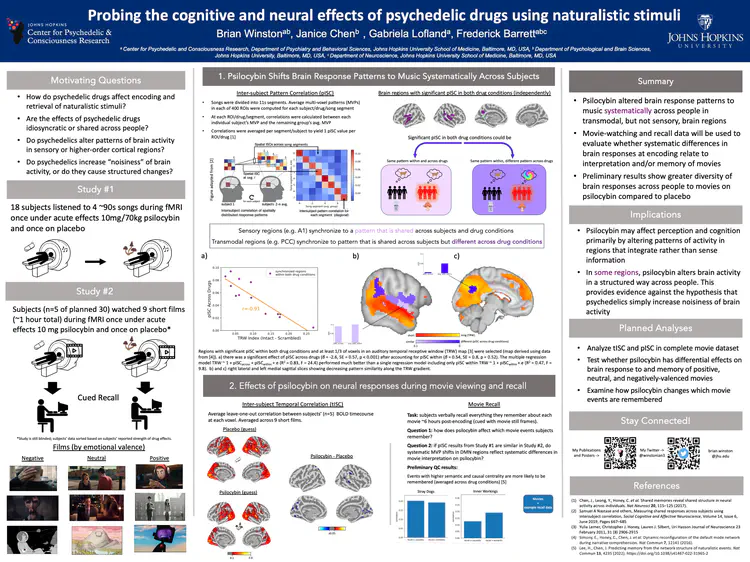

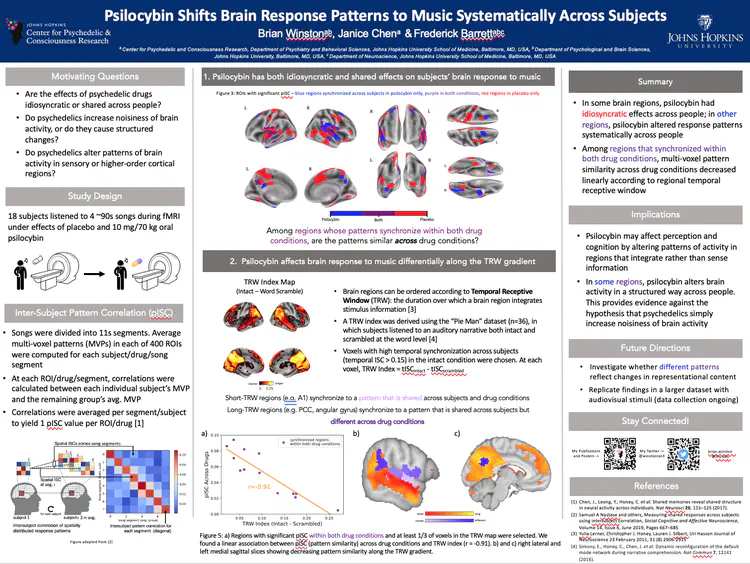

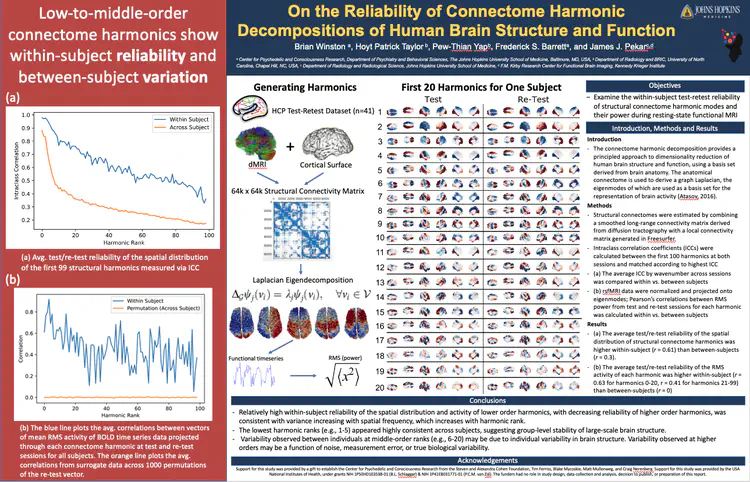

Using Naturalistic Stimuli to Probe the Cognitive and Neural Effects of Psychedelics

How do psychedelics affect the mind and brain in real-world scenarios?

To date, most psychedelic neuroimaging studies have administered drugs to people lying down with their eyes closed. Using these data, the field has built theories and models for how psychedelics alter thoughts, behavior, and brain activity with the assumption that these models will generalize across contexts. During most of waking life, however, people have their eyes open, they process information, interact with other people, and solve problems. Anecdotally, psychedelic effects are different in these states, but the field has not yet characterized how. I record people’s brain activity while they watch movies. Movies simulate many features of real life such as movement through space, social interaction, emotional changes, and narrative structure. These data allow us to probe how psychedelics modulate perception, emotional responses, memory, causal judgment, and much more.

Movie Stimuli Used in Winston et al., 2026 (in prep):

Example recall data

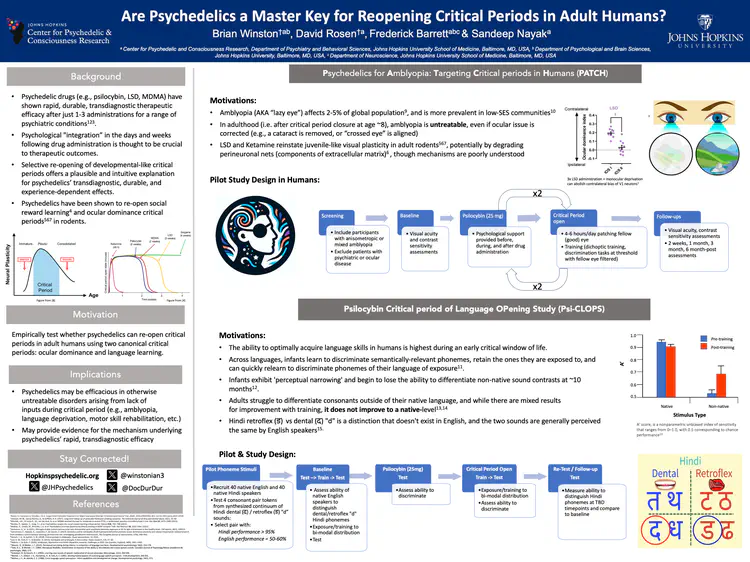

Reopening Critical Periods with Psychedelics

PATCH and Psi-CLOPS studies:

An intriguing hypothesis is that psychedelics enable flexible and durable behavioral changes by transiently increasing the malleability of neural circuits. I received a 2025 Discovery Award to conduct the first-ever human study that will measure the effects of psychedelics on neuroplasticity: PATCH - Psilocybin for Amblyopia, Targeting Critical periods in Humans. Stay tuned for more!

Science Communication

Europe Trip

Hiya Kati,

I just wanted to let you know that I arrived home safe and sound! I had an amazing trip in Europe; it was so good, in fact, that I am sad to be back in the USA. More than anything, I find European people more down-to-earth than Americans.

As you may know, I didn’t stay in hotels but rather in youth hostels. Most of the places I stayed housed about 8 people per room. The biggest room I stayed in was in Barcelona, which had 24 beds! Surprisingly, I rarely had any issues or discomforts in this arrangement. People either mind their own business or are friendly. I made quite a few friends just because they happened to be in the bunk across from me, or they arrived at the same time as me. Of course, people return to the room at all hours of the night. Some people snore, others toss and turn. But I had a wonderful set of earplugs that I wore every night that blocked out all the noise. In fact, one night when I was in Budapest, a traffic accident apparently happened right outside of our (open) window. There were police cars, ambulances, tons of commotion, yet I was completely oblivious and only heard about it in the morning. As I said: I had a pair of amazing earplugs that blocked out all the noise.

I would love to tell you about the trip, but it’s hard to organize my thoughts. Maybe I will go place by place chronologically, and write a few thoughts about each. Feel free to skip whatever you’re not interested in.

UK:

I started the trip in London. The occasion: a conference on MRI (Magnetic Resonance Imaging). The research I’ve been working on involves analyzing images of the brain acquired by MRI, so the conference wanted me to present this work. Overall, it was a pretty boring few days at the conference since I didn’t know anybody. I made a few friends on my last night, some folks from Cardiff University in Wales, but overall it was a pretty lackluster and lonely experience. My presentation did go okay, and there were a couple hundred people in the audience. That said, I didn’t really care that much about the research I was presenting, so again, it wasn’t the most meaningful experience. It’ll make a nice line on the resume, at least!

Outside of the conference, I did do some sightseeing. However, I made the questionable decision of booking my AirBnB in a neighborhood called “East Ham.” I chose this location since it was close to the conference venue; however, what I didn’t know at the time is that East Ham is a pretty seedy part of town. In fact, it reminded me a lot of Baltimore, which is not what I was expecting when coming to London. It didn’t help that for three of the days I was there, the tube (metro) by my AirBnB was closed for repairs, so I was pretty much stuck in East Ham. Not an ideal situation! At least I got to sample the authentic local cuisine (seen below).

That said, my time in London was not entirely negative. I appreciated how cultured and diverse it is. The city parks are numerous and spectacular. Overall, I appreciated the politeness of not only people but also the signage. The most obvious example is the voice telling you to “Mind the Gap” as you exit the tube. But that’s just one of many tips the city gives you. When you get off the tube there’s always a sign that indicates the “Way out,” and when you cross the road, signs remind you to “Look left” or “Look right,” which is especially useful for dumb tourists like me who get confused which way the cars are going in the UK. In the U.S., on the other hand, it feels like an “every man for himself” world. Nobody tells you to mind the gap: if you fall in, that’s your problem.

MORE TO COME!!

It’s Time for Psychedelic Neuroscience to Face its DMNs*

About 95% of the time I read a popular science article explaining the “neuroscience of psychedelic drugs,” I cringe. Not because the topic is dull — just the contrary: I find it so interesting that I got a job studying it full-time. I cringe because the prevailing narrative on how psychedelics alter brain function is flawed, and it’s leading a lot of smart people interested in the research astray.

I know this because I used to be one of those people. An undergrad neuroscience major and self-proclaimed consciousness nerd, I couldn’t get enough of the “psychedelic renaissance.” I devoured Michael Pollan’s How to Change Your Mind and burned through episodes of Netflix’s The Mind, Explained. I came away mesmerized by the potential for psychedelic treatment. But I also inherited an idea about how psychedelics work in the brain. An idea, I’m realizing — after two years of conducting research at the world’s largest psychedelic research center — that is not grounded in fact.

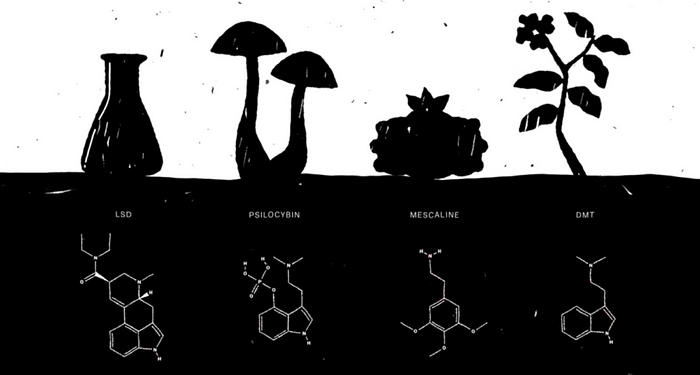

I’ll call this idea — which circulates in book chapters, TV episodes, webinars, and even academic papers — the “popular account” of psychedelic action. It begins like this. Psilocybin, the chemical behind the magic in “magic mushrooms,” binds to a specific type of serotonin receptor. This binding causes a variety of network-level changes in the brain that eventually lead to a feeling of “ego-dissolution.” Before going any further, let’s define some terms (for more info, see my article on fMRI).

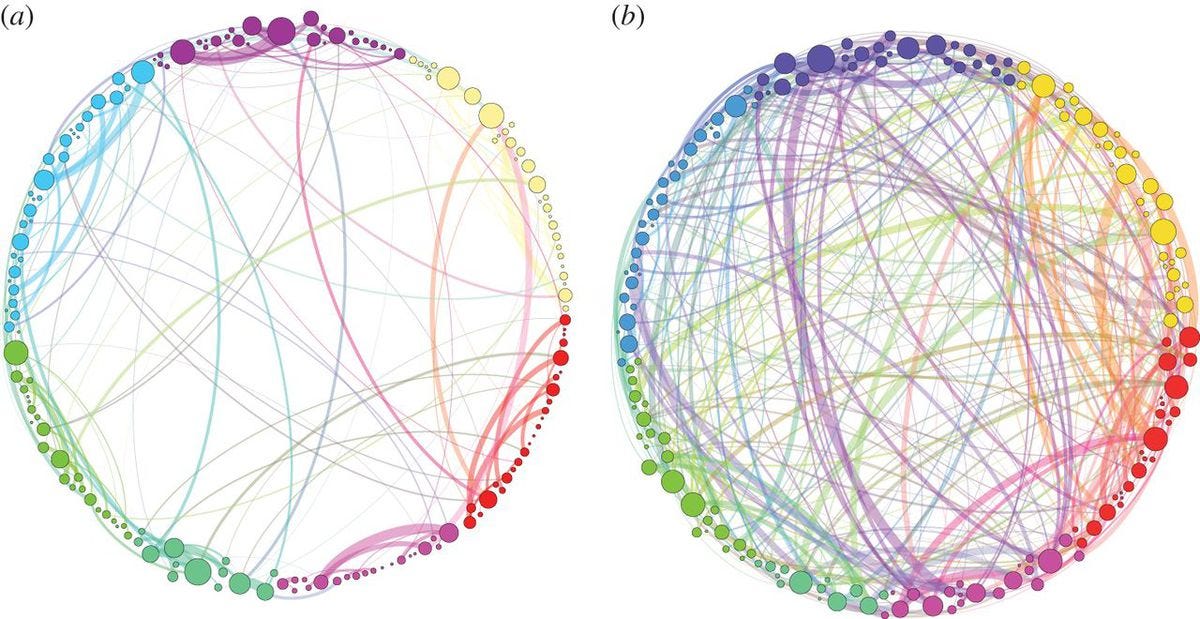

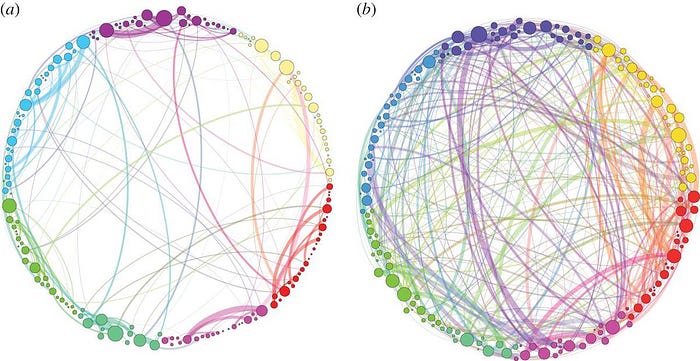

Brain networks are sets of neural regions that fire together when a person engages in a particular activity. The auditory network, for example, comprises a handful of areas along the right and left sides of the brain. When people listen to music — or any sounds — in an MRI scanner, these areas light up. When all of the regions in a particular network are firing together in synchrony, we’d say the network is highly connected. So we’d expect the auditory network to be more connected when a person is listening to Beethoven’s 5th, for example, than when they are lying in silence.
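One common way to put a number on “how connected” a network is, sketched here as an assumption rather than the exact pipeline any particular study uses, is to average the pairwise correlations between the regions’ activity time series. The simulated “listening” and “silence” runs below are hypothetical, and `network_connectivity` is an illustrative helper name.

```python
import numpy as np

def network_connectivity(timeseries: np.ndarray) -> float:
    """Mean pairwise Pearson correlation between regions.

    timeseries: array of shape (n_regions, n_timepoints).
    """
    corr = np.corrcoef(timeseries)          # (n_regions, n_regions) correlation matrix
    n = corr.shape[0]
    upper = corr[np.triu_indices(n, k=1)]   # each region pair once, diagonal excluded
    return float(upper.mean())

rng = np.random.default_rng(0)
n_regions, n_timepoints = 4, 200

# Hypothetical "listening" run: every region follows a shared sound-driven signal plus noise.
shared_signal = rng.standard_normal(n_timepoints)
listening = shared_signal + 0.5 * rng.standard_normal((n_regions, n_timepoints))

# Hypothetical "silence" run: regions fluctuate independently of one another.
silence = rng.standard_normal((n_regions, n_timepoints))

listening_fc = network_connectivity(listening)
silence_fc = network_connectivity(silence)
print(f"listening: {listening_fc:.2f}, silence: {silence_fc:.2f}")
```

With these settings the listening run should come out far more connected than the silence run, mirroring the Beethoven example; real analyses add preprocessing steps that this sketch skips.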

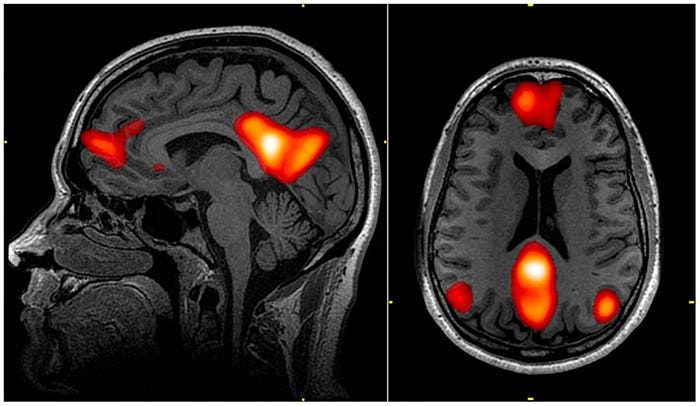

The “popular account” of psychedelic action hinges on one finding: psychedelic drugs cause a decrease in connectivity of a peculiar network called the Default Mode Network (DMN). In the example above, I explained that the auditory network is most connected while someone listens to sounds. And for virtually all brain networks, there is some activity during which the network is most connected. Except, that is, the DMN. The DMN is most strongly connected when a person engages in no particular activity (hence the name: the brain’s “default mode”).

But the human mind is a tireless beast, and even when people are “doing nothing” in an MRI scanner, they’re still doing something: usually mind wandering or reflecting on themselves. For this reason, the DMN is said to be highly connected during “self-referential thinking.”

So what does it mean for this network to become less connected, or — in neuroimaging terms — to disintegrate? After taking a psychedelic, some people report feeling “ego-dissolution”: a transient shift — or in some cases, obliteration — of their normal sense of self or identity. Psychedelic pundits sometimes contend that disintegration of the DMN is the biological basis for this feeling. “To the extent the ego can be said to have a location in the brain,” says Michael Pollan on Big Think, “it appears to be this, the Default Mode Network.”

It’s an elegant story, one that satisfyingly explains how psychedelics exert their therapeutic effects. The network in our brain that represents our identities is temporarily subdued, letting the brain go “off [its] leash,” as Pollan puts it. People with substance abuse disorders no longer see themselves as “addicts,” and people with depression no longer feel their identity bound to their illness.

Unfortunately, the “popular account” is based on faulty logic. To help understand why, consider this thought experiment (for the record, I still revere Michael Pollan).

Suppose I slide 100 people into an MRI scanner (not all at the same time) and image their brains while they watch clips of the World Cup. After analyzing the data, I observe a network of brain regions that reliably fire together while people watch the clips. Excited, I run another experiment where I show people different soccer clips — children kicking a ball around, reels from the video game FIFA — and the network is still highly connected. Did I find the “soccer network”?

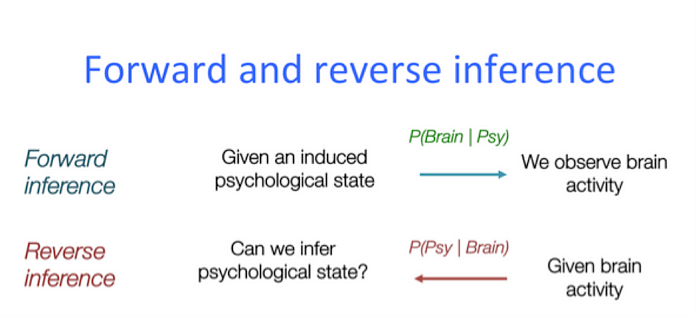

Probably not. First off, everyone in the experiment was watching people perform actions with their feet, so maybe I’ve found the “people-performing-actions-with-their-feet network.” Or, on a more basic level, everyone in the experiment had their eyes open, so likely I was observing a visual network. The point is, my findings were wholly unspecific to soccer; I’ve fallen prey to what neuroimagers call the fallacy of reverse inference.

A reverse inference is any claim that runs from brain data to mental states: observing that a region or network is active and concluding what the person must be thinking or feeling. To claim that the network we identified is the “soccer network” makes a specific prediction: if I observe someone’s brain activity, and that network lights up, then the person must be thinking about soccer. So if I instead showed people baseball clips and the network was active, we’d know that it’s not specific to soccer. Since most brain networks are involved in a myriad of mental states, valid reverse inferences are notoriously difficult to make.
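One common way to formalize the reverse-inference problem is Bayes’ rule. The sketch below assumes made-up probabilities: how often the network is active when people think about soccer, how often it is active otherwise, and how common soccer thoughts are to begin with. `reverse_inference` is a hypothetical helper, not a standard library function.

```python
def reverse_inference(p_active_given_state, p_active_given_other, prior_state):
    """Posterior probability of a mental state given that a network is active (Bayes' rule)."""
    # Total probability that the network is active, across the state and everything else.
    p_active = p_active_given_state * prior_state + p_active_given_other * (1 - prior_state)
    return p_active_given_state * prior_state / p_active

# Hypothetical numbers, chosen only for illustration.
# A selective network: rarely active unless the person is thinking about soccer.
specific = reverse_inference(0.9, 0.05, 0.2)
# An unselective network: active for soccer, baseball, open eyes, and much else.
unspecific = reverse_inference(0.9, 0.8, 0.2)
print(f"selective network: {specific:.2f}, unselective network: {unspecific:.2f}")
```

When the network is almost as likely to be active without soccer (0.8) as with it (0.9), seeing it light up barely moves the estimate from the 0.2 prior to about 0.22; only a selective network supports a confident inference (about 0.82).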

Returning to psychedelics, to claim that the DMN is the neural seat of the ego — and that its disintegration represents ego loss — is an ambitious reverse inference. To prove its validity, a researcher would have to demonstrate that disintegration of the DMN and ego dissolution correlate 1:1. In other words, if a subject’s MRI scan showed a disintegration of the DMN, then that subject must have been experiencing ego dissolution.

Empirically, this couldn’t be further from the truth. It turns out that disintegration of the DMN isn’t specific to “ego-dissolving” drugs like psilocybin. It’s not even specific to hallucinogens. Among the many drugs and activities that acutely decrease connectivity of the DMN, perhaps the most puzzling is alcohol. I say this because my ego does the opposite of dissolving after a pint of Blue Moon.

To link DMN disintegration to a mental state as particular as ego-dissolution is to conveniently ignore years of non-psychedelic research findings. A more likely explanation is that disintegration of the DMN is a neural correlate of being messed up on any drug, but even this may be overly specific.

When discussing the neuroscience of psychedelics, it’s important to simplify research findings for a lay audience, and even a certain degree of speculation is warranted in the appropriate context. But propagating an empirical inaccuracy as “our best guess” for how psychedelics work in the brain is a disservice to both the neuroscientists doing serious work on this topic and the curious public looking for good information.

In closing, I’ll link an alternate account of psychedelic action in the brain that goes beyond faulty reverse inferences involving the DMN. Franz Vollenweider, Katrin Preller, and the team at the University of Zurich have proposed a model of psychedelic activity that implicates the thalamus, a region involved in filtering sensory information. Psychedelics impair function of the thalamus, they suggest, which allows lights, sounds, and even thoughts that normally get “filtered out” to reach conscious awareness.

fMRI is the hottest technology in neuroimaging research. Here’s an ELI5.

What you need to know about the method that’s revolutionizing our understanding of the brain

In 2011, a group of neuroscientists at Berkeley scanned people with fMRI while they watched movie trailers. Using just signals from the brain, the researchers configured a set of machine learning algorithms to reconstruct estimates of the trailers that people had seen. Are the clips perfect? No. But the sci-fi-level potential is there.

From medicine to research, fMRI is providing scientists with an unprecedented level of detail in deciphering the complex code by which the brain operates. In this article, I’ll explain the basics of what fMRI is and how it works (in case you’re wondering whether we can turn your dreams into movies… well, not yet, but maybe soon).

MRI (Magnetic Resonance Imaging) is used in both research and medical practice to take 3D “images” of the inside of the body. These images can reveal the structure of organs, bones, ligaments, nerves, and other types of tissue, making them powerful diagnostic tools.

But traditional MRI has its limitations: an MR image is static — like a photograph, it captures its subject (the body) at a fixed moment in time. This isn’t a problem in most cases: if a radiologist wants to diagnose a tumor in a patient’s knee, for example, one image is all she needs to locate it. Other physiological functions, however, inherently unfold over time: the heart’s beat, the lungs’ inhale. Mapping these types of processes requires 4D images — that is, a sort of MR movie rather than one static image.

The brain is one such dynamic organ. At any moment in time, millions of neurons fire in complex patterns that — when taken together — not only control our organs and limbs, but also produce our conscious, sensory experience of the world.

To try to comprehend this process, researchers at Mass General Hospital in the early 1990s developed what is now the gold-standard technique in neuroscience research: fMRI. At the time, everyone understood that traditional MRI (often called “structural MRI”) is useful for physically locating tissue in the brain; however, it provides little help in mapping the brain’s processes as they fluctuate over time. Moreover, neural firing, which is carried out via electrical signaling, doesn’t show up in these brain scans.

The pioneers of fMRI figured out that they could rapidly take a series of MR images of the brain and track changes in blood flow over time. Crucial to this technique is that brain regions use more blood when they are more active. Therefore, we can use the dynamics of blood flow to estimate neural firing. This MR-movie method came to be known as functional MRI because it helps decipher the function, or role, of particular brain regions while people are engaged in various activities.
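Because blood flow responds sluggishly to neural firing, fMRI analyses commonly model the measured signal as neural activity convolved with a hemodynamic response function (HRF). The sketch below assumes a simplified gamma-shaped HRF and a hypothetical 10-second burst of activity; it only illustrates why the blood-flow signal lags behind the firing it is used to estimate.

```python
import math
import numpy as np

dt = 1.0                                   # seconds per sample (hypothetical scan rate)
t = np.arange(0, 30, dt)                   # HRF support: 0 to 29 s

# Simplified gamma-shaped HRF (an assumption; real analyses use more detailed models).
shape = 6.0
hrf = t ** (shape - 1) * np.exp(-t) / math.gamma(shape)   # peaks at t = shape - 1 = 5 s

n_samples = 60
neural = np.zeros(n_samples)
neural[10:20] = 1.0                        # hypothetical burst of neural activity, 10 s to 20 s

# Predicted blood-flow signal: neural activity convolved with the HRF, trimmed to the run length.
bold = np.convolve(neural, hrf)[:n_samples]

print("neural burst begins at", int(np.argmax(neural)), "s")
print("predicted blood-flow signal peaks at", int(np.argmax(bold)), "s")
```

The predicted signal keeps rising after the burst ends and peaks several seconds later, which is why analyses work with this delayed, smoothed version of neural activity rather than the firing itself.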

A classic fMRI experiment, for example, compares a person’s brain activity while they tap their finger with their brain activity while they’re at rest. Researchers collect a 4D “movie” of MR images while subjects perform these two activities. Then, they subtract the resting brain activity, which mostly involves “background” processes like respiration and organ control, from the finger-tapping brain activity. On average, the difference between the two tasks isolates the brain activity involved in finger-tapping (since all of the background processes subtract out). This particular experiment would reveal that the motor cortex is highly active during finger-tapping but not during rest. Generally speaking, these types of task-based fMRI paradigms have allowed researchers to gain a much better understanding of the function of different brain regions than ever before.
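The subtraction logic of the finger-tapping experiment can be sketched with simulated data. Everything here is hypothetical: three regions share the same background activity in both conditions, and only the motor cortex is assumed to respond to tapping. Real analyses fit statistical models voxel by voxel rather than comparing raw means.

```python
import numpy as np

rng = np.random.default_rng(1)
regions = ["motor cortex", "visual cortex", "brainstem"]
n_scans = 50

# Hypothetical signals: every region carries the same "background" activity in both conditions.
background = np.array([1.0, 1.0, 1.0])
tapping_effect = np.array([0.8, 0.0, 0.0])      # assumed: only motor cortex responds to tapping

rest = background + 0.1 * rng.standard_normal((n_scans, 3))
tapping = background + tapping_effect + 0.1 * rng.standard_normal((n_scans, 3))

# Subtraction: average each condition over scans, then take tapping minus rest.
# The shared background cancels, leaving (approximately) the tapping effect.
contrast = tapping.mean(axis=0) - rest.mean(axis=0)
for name, value in zip(regions, contrast):
    print(f"{name}: {value:+.2f}")

most_active = regions[int(np.argmax(contrast))]
print("largest tapping-minus-rest difference:", most_active)
```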

If you’re wondering, flashier applications of this technology are definitely possible in the future. Acquiring a large amount of fMRI data from participants allows researchers to understand the meaning of complex patterns of activity. Imagine that we observe a pattern of brain activity that only occurs while a participant watches tennis. If we then scan this person with fMRI while they’re sleeping and observe the pattern, we could hypothesize that they were dreaming about tennis. As time goes on, our understanding of even finer-grained patterns may improve to the point where we can convincingly reconstruct dreams from brain scans. But if you’re anything like me, it might be best if we kept those private…
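The tennis-dream idea is a form of pattern decoding. A minimal sketch, assuming hypothetical stored activity patterns for two activities, classifies a new pattern by whichever stored pattern it correlates with best. Real decoders are usually trained classifiers, so treat this nearest-template approach as a simplified stand-in; `decode` is an illustrative name.

```python
import numpy as np

rng = np.random.default_rng(2)
n_voxels = 100

# Hypothetical average activity patterns recorded while the participant was awake.
templates = {
    "tennis": rng.standard_normal(n_voxels),
    "cooking": rng.standard_normal(n_voxels),
}

def decode(pattern, templates):
    """Return the label whose template correlates best with the observed pattern."""
    scores = {label: np.corrcoef(pattern, tmpl)[0, 1] for label, tmpl in templates.items()}
    return max(scores, key=scores.get), scores

# Hypothetical pattern recorded during sleep: a noisy version of the tennis pattern.
sleep_pattern = templates["tennis"] + 1.0 * rng.standard_normal(n_voxels)
label, scores = decode(sleep_pattern, templates)
print(label, {k: round(float(v), 2) for k, v in scores.items()})
```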

Notes:

fMRI is just one of many techniques being used in neuroscience. While it’s popular in human neuroimaging at the moment, other methods like EEG and TMS are also widely used. Animal models afford even better methods since researchers can record neurons more directly; however, there is an obvious gap in cognition between, e.g., rodents and humans.

Finally, fMRI has many limitations, too many to list here. We are still at a relatively early stage in our use and application of this technology.

You can follow me on Twitter here. I tweet about psychedelics, neuroscience, and more.

How Does the Brain Alter your Perception of Time?

Have you ever sat in a lecture or meeting that dragged on forever? Perhaps you looked at the clock, tried to distract yourself for a while, then looked at the clock again and only a few minutes had passed. Under different circumstances, you might have experienced the opposite: losing track of time. Maybe you were absorbed in your favorite hobby or spending time with your best friend, and before you knew it, several hours had flown by. These experiences are common, but scientists are just starting to understand the cognitive and neural mechanisms that underlie them.

Psychologists have long made a distinction between metric time and episodic time: metric time is what a clock measures — it moves forward steadily and without variation; episodic time, on the other hand, refers to our subjective perception of time. Imagine yourself sitting in a boring meeting for an hour; now, compare that to speaking with your best friend for an hour. Although both of these events last the same amount of metric time, there seems to be something real about the difference in how long those experiences feel. This phenomenon has a particularly mysterious quality to it: are we really experiencing the passage of time differently? Or is it just an illusory product of our memories?

A recent study published in Nature may shed light on this issue. The research group led by Stanford neurobiologist Albert Tsao believes it may have found the neurobiological correlates of episodic time perception. For context, researchers have historically been able to identify neurons that code metric time: “time cells” in the hippocampus, for example, have been found to fire continuously like a metronome as an animal performs a task (Tsao, 57). Other neurons, such as those in the suprachiasmatic nucleus, serve as biological clocks to regulate our circadian rhythms in accordance with the light/dark cycle. Despite these advancements, Tsao et al. write that “our understanding of how the brain represents…episodic time is still in a nascent stage” (57). The group’s findings, though, may represent a major step in the right direction.

The Tsao-led team investigated a particular group of neurons in the lateral entorhinal cortex (LEC), a structure that serves as a key point of contact between the hippocampus (the brain’s memory center) and the cerebral cortex. In their 2018 study, the researchers allowed rats to explore a box whose walls alternated in color back and forth from black to white every six minutes; there was a two-minute period with no colors between each switch. Meanwhile, the researchers recorded the firing rates of the rats’ LEC neurons using brain-implanted microelectrodes.

The first clue that these LEC neurons were time-sensitive is that they displayed descending “ramping activity”; that is, when a trial started and the walls changed color, each neuron fired repeatedly at a high frequency, but this rate steadily decreased as the trial progressed. During the two minutes between trials, these neurons were silent, but when the next trial started again, the ramping pattern resumed. Taken alone, this suggests that LEC neurons function like a countdown timer for the trial periods. But what does this have to do with episodic time? Couldn’t these neurons just be coding metric time?
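The “countdown timer” reading can be illustrated with an idealized neuron whose firing rate falls linearly across a trial. The start and end rates below are hypothetical, the six-minute trial length comes from the description above, and the actual study analyzed populations of neurons rather than a single perfect ramp. The point is only that a steadily ramping rate can be inverted to estimate elapsed time.

```python
import numpy as np

TRIAL_LENGTH = 360.0     # seconds: six-minute trials, as described above
START_RATE = 20.0        # spikes/s at trial onset (hypothetical)
END_RATE = 5.0           # spikes/s at trial end (hypothetical)

def ramp_rate(t):
    """Idealized descending ramp: firing rate falls linearly over the trial."""
    return START_RATE + (END_RATE - START_RATE) * (t / TRIAL_LENGTH)

def decode_elapsed_time(rate):
    """Invert the ramp to read elapsed time off the firing rate (a countdown-timer readout)."""
    return TRIAL_LENGTH * (START_RATE - rate) / (START_RATE - END_RATE)

rng = np.random.default_rng(3)
true_times = np.array([30.0, 120.0, 240.0, 330.0])
# Noisy observed rates at those moments in the trial.
observed = ramp_rate(true_times) + rng.normal(0, 0.3, size=true_times.size)
estimates = decode_elapsed_time(observed)
print(np.round(estimates))
```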

The researchers addressed this in a second experiment in which rats were given a continuous-alternation task. Specifically, rats were put on a track that had a left turn and then a right turn; the experiment had 40 repetitions, or trials. If LEC neurons only coded metric time, we would expect to see another ramping pattern in this experiment. In other words, the LEC neurons would decrease in firing rate over the course of a run on the track and be silent during the intertrial periods. The researchers found that LEC activity in the first few trials indeed fit this model: specifically, firing rate was an accurate predictor of trial versus intertrial periods. But after the initial trials, LEC activity decreased across the board, and the neurons appeared to stop coding for time altogether.

The researchers interpret this peculiar result as evidence that LEC neurons are not metric time coders but instead “experience coders.” In their words, “temporal information in the LEC arises simply because the animal’s moment-to-moment experience constantly changes, and time can be extracted from this changing flow of experience” (60). In the continuous alternation (turning) task, LEC neurons initially fired due to the task’s novel nature, but once the animals learned what was going on, and their behavior became automatic, LEC activity decreased.

At first glance, this portrait of LEC neurons seems consistent with most people’s notions of episodic time. Rapidly changing situations with high levels of “experience” to code for may feel slower than familiar, everyday tasks. So, next time you’re stuck in a meeting and can’t stop looking at the clock, see if you can find some repetitive task to do: things might go by a little quicker … just make sure your boss doesn’t notice!

References:

Tsao A, Sugar J, Lu L, Wang C, Knierim JJ, Moser M-B, Moser EI. Integrating time from experience in the lateral entorhinal cortex. Nature. 2018;561:57–62.


What are the Normative Implications of Joshua Greene’s Reduction of Rationalist Deontology?

Two classes of moral theories are consequentialism and deontology; though they posit distinct mechanisms for how humans make (and should make) moral judgments, we appear to make both characteristic consequentialist judgments and characteristic deontological judgments. For consequentialists, the only criteria for determining whether a given action is “good” or “bad” are the consequences of that action. The intention of the actor and the means of conducting the action are only relevant insofar as they relate to the overall consequences. In contrast, deontologists regard the character of a certain action as more important than its consequences; specifically, an action is either right or wrong as defined by some set of moral rules or duties. Joshua Greene, however, argues that humans do not make characteristic deontological judgments as a result of following a moral ruleset; in reality, our “deontological” judgments are simply an evolved response to particular emotional stimuli. This finding, he suggests, calls into question deontology as a plausible class of normative moral theories. While I agree with Greene’s characterization of descriptive deontological theories, I contend that his conclusions do not preclude the possible existence of a non-descriptive, normative deontological theory of morality.

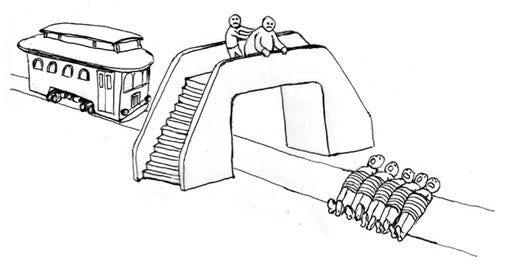

One characteristically consequentialist judgment that the majority of people make pertains to the classic trolley problem. In this scenario, a trolley is heading down a single track to which, up ahead, five people are tied. Before the segment of track to which the people are tied, the track forks onto a side track to which only one person is tied. Research subjects are asked whether they would pull a lever to switch the trolley from the track with five people to the track with one person; the majority report that they would pull the lever and save a net of four people. Paradoxically, however, people display characteristic deontological judgments in response to a similar scenario: the footbridge problem. A trolley is, again, heading down a single track to which five people are tied. Instead of a side track, there is a footbridge above the original track on which a large woman is standing. By pushing the woman off the bridge and onto the track, killing her in the process, it is possible to save the five on the track. The consequences of pulling the lever in the trolley case and pushing the woman in the footbridge case are equal: one person dies in order to save five. Yet people’s judgments differ: most are willing to pull the lever, but the majority will not push the woman. As Greene notes, many philosophers have attempted to provide normative, deontological explanations for these results such that people’s judgments in the trolley and footbridge cases are justified. For example, it could be that our moral ruleset prohibits killing a person as a means to help someone else. However, Greene asserts that this and other attempted normative explanations have consistently been met with counterexamples, and that no explanation has been wholly satisfactory.

Greene proposes that humans’ tendency to display both characteristic consequentialist and deontological judgments tracks an analogous, psychological dual-process of generating moral judgments. Specifically, he hypothesizes that characteristic deontological judgments arise from rapid, affective intuitions, while consequentialist judgments involve more deliberative, emotionless (or “cognitive”) reasoning. In explaining people’s responses to the footbridge-case, for example, Greene proposes that people make a “deontological” judgment because pushing the person off the bridge provokes a strong emotional response while pulling the lever does not. Consequently, any normative explanation invoking rules or duties that people offer for making the “deontological” judgment will simply be a post-hoc justification of the affective impulse. The judgment, in this sense, is not deontological per se because it’s generated by instinct rather than by adhering to some abstract moral truth.

For us to believe this hypothesis, Greene must provide some objective evidence that 1) people recruit more emotional faculties when making “deontological” judgments than consequentialist judgments, and 2) humans come equipped with, or develop, the specific affective responses that track characteristic “deontological” judgments.

To the first point, Greene uses neuroimaging and reaction time data to suggest a dissociation between the emotional and “cognitive” mechanisms for generating judgments, such that “deontological” judgments recruit the emotional system and consequentialist judgments recruit the “cognitive” system. For example, when people make close-proximity, emotionally salient moral decisions, such as deciding whether or not to push someone in the footbridge case, neuroimaging data shows increased activity in several brain structures thought to be involved in affective processing, including the PCC, MPFC, and amygdala. In contrast, when people contemplate more impersonal, less emotionally salient moral dilemmas, such as the classic trolley case, imaging data shows increased activation in characteristically “cognitive” neural structures such as the DLPFC and inferior parietal lobe. To further dissociate these two processes, Greene hypothesizes that if they were truly separate, subjects who judge salient moral violations to be acceptable would take longer to do so than subjects who judge them to be wrong. For instance, in the footbridge case, a subject who decides he would push the woman off the bridge would be “cognitively” overriding his negative emotional response to pushing her. This “cognitive” overriding process, theoretically, would take time. Therefore, if the dual-process model were correct, subjects who would push the woman off the bridge would take longer to respond than subjects who judge the action as wrong, that is, those who simply follow their intuitive emotional responses. The reaction time data, as it turns out, corroborates this hypothesis: positive responses to salient moral dilemmas take significantly longer to produce than negative ones. Taken together, the neuroimaging and RT data lend credence to the dual-process model, in which emotionally salient dilemmas employ a different mechanism for generating judgments than emotionally neutral scenarios.
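The reaction-time prediction amounts to comparing mean response times between two kinds of answers. The sketch below uses made-up numbers purely to show the comparison (they are not Greene’s data) and a simple two-sample Welch t statistic, which is an assumption; the original studies used their own designs and analyses. `welch_t` is an illustrative helper.

```python
import numpy as np

def welch_t(a, b):
    """Welch's t statistic for the difference in means of two independent samples."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    se = np.sqrt(a.var(ddof=1) / a.size + b.var(ddof=1) / b.size)
    return (a.mean() - b.mean()) / se

# Hypothetical reaction times (seconds) for personal dilemmas like the footbridge case.
rt_appropriate = [6.8, 7.4, 5.9, 8.1, 7.0, 6.5, 7.7, 6.9]     # "yes, push": emotion overridden
rt_inappropriate = [4.9, 5.3, 4.4, 5.8, 5.1, 4.7, 5.5, 5.0]   # "no, don't push": intuition followed

diff = np.mean(rt_appropriate) - np.mean(rt_inappropriate)
t_stat = welch_t(rt_appropriate, rt_inappropriate)
print(f"mean difference: {diff:.2f} s, Welch t = {t_stat:.2f}")
```

Under the dual-process model, a positive difference (slower “yes” answers) is the predicted pattern; a difference near zero would count against it.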

In arguing that characteristic deontological judgments are, in their essence, merely post-hoc justifications of intuitive, affective responses, Greene must also show that our set of emotional responses tracks what we normally think of as deontological rules. One classic deontological case is the particular pattern of behavior people exhibit when giving money to charitable causes. From a utilitarian point of view, people have a moral obligation to help those less fortunate than themselves if doing so doesn’t incur a significant cost; yet wealthy people spend money on luxuries all the time that, theoretically, they could have donated to a charitable cause. Thus, the majority of people do not act like pure consequentialists. In practice, it appears that people decide whom to help based on their relative proximity to the person or cause they are helping. For example, the vast majority of people, if walking by a pond, would not hesitate to save a child who was drowning in it. However, many of these same people choose every day not to donate to charitable causes that save the lives of starving children. The deontologist will be hard-pressed to ascribe this behavior to some set of moral rules that encourages helping some people but not others. On the other hand, this scenario fits the emotional-salience model neatly: seeing a child drowning in front of us causes an affective response that drives us to help the child. When donating to a charitable cause, the targets of our donations are often nameless and faceless, so their suffering provokes less of an emotional response, and we are less likely to donate. In both the trolley/footbridge and pond/charity pairings, the scenarios that drive emotional responses, and thus provoke “deontological” judgments, are the ones in which the subject is physically close to the person being helped or harmed. In other words, moral dilemmas that are “up close and personal” (16) tend to recruit the affective system of judgment.

The finding that degree of proximity affects which system of moral judgment we use calls for explanation, and Greene proposes an evolutionary account. In humans' original adaptive environment, our intuitive responses developed to handle only “up close and personal” situations. Our primate and hunter-gatherer ancestors never had the opportunity to donate money to a charity helping a child on the other side of the world, so it follows that such abstract scenarios do not trigger the emotional response that an analogous “up close and personal” dilemma does. In this sense, our intuitive responses can be thought of as an adaptive behavioral heuristic.

Proximity is not the only variable that can be manipulated to track how and when we make intuitive judgments. Greene's line of thinking can also be applied to theories of punishment. A consequentialist would favor only punishments that maximize good outcomes in the future; for them, punitive action is justified only if it leads to, for example, deterrence or the containment of a dangerous criminal. For deontologists, on the other hand, punishment can be justified in and of itself, whether or not it produces any particular outcome. The deontological conception of punishment involves giving criminals “what they deserve” in a retributive sense, bringing to mind the saying “the punishment should fit the crime.” Greene cites a handful of studies suggesting that while people claim to take into account both consequentialist and deontological reasons for punishment, in practice they largely ignore the consequentialist ones. In one scenario, subjects decided whether to fine a company that had committed a crime, even though the fine would lead to overall harmful consequences; the majority still judged it right to impose the fine. In a range of other studies, subjects likewise disregarded the consequences of punitive action, such as its deterrent effect, and instead exhibited retributivist behavior. Since people do not seem to punish in a typical consequentialist fashion, this raises the question: is there some variable that, like the “up close and personal” effect observed earlier, tracks how people make punitive judgments? Greene cites two studies that converge on “moral outrage” as an accurate predictor of punitive judgment: if a subject is outraged by a particular transgression (that is, has a strong, negative emotional reaction to it), the subject will punish the perpetrator more severely.
This is useful in that it suggests our punitive judgments are largely the result of Haidtian affective intuitions. But simply identifying “moral outrage” does not tell the full story: what determines what people find outrageous? Is there an evolutionary explanation like there was for the trolley/footbridge phenomenon?

Greene conjectures that our moral outrage derives from an adaptive heuristic for punishing non-cooperators. He describes a study of the ultimatum game, in which two players decide how to split a sum of money, for example $10. The first player proposes how to split the $10, and the second player can either accept or reject the offer; if the offer is rejected, neither player receives anything. Empirically, second players typically accept fair or near-fair splits, such as $5/$5 or $6/$4. However, second players on the whole reject “unfair” offers such as $8/$2. This is a puzzling result, because they willingly forfeit free money simply to punish greedy first players. During the game, brain regions involved in emotion were more active in second players after they received unfair offers than after fair ones. So again, people appear to make non-consequentialist, “deontological” judgments based on emotional responses. Moreover, Greene argues, this affective response also has an evolutionary basis. In our original adaptive environments, it was important to punish non-cooperators quickly to ensure the success of the group; specifically, “the emotions that drive us to punish wrong-doers evolved as an efficient mechanism for stabilizing cooperation” (45).

Greene’s work of reduction is powerful and convincing. As he points out, when we consider whether our characteristic deontological judgments arise from adherence to an unknown, complex set of moral rules or from a set of adaptively formed intuitions, the parsimonious choice is clearly the latter. Given this finding, it is important to consider its descriptive and normative implications.

Consequentialism, as a descriptive theory, does not seem plausible: the evidence from the footbridge problem and from people’s charitable and punitive behavior makes clear that people do not act like pure consequentialists. Similarly, Greene makes a persuasive case that we follow no particular moral ruleset, but instead act in accordance with our intuitions in many situations. Thus, rationalist deontology cannot function as a fitting descriptive theory either. In all likelihood, an apt descriptive moral theory will take into account the interplay between the emotional and “cognitive” systems we use to make judgments.

The aim of Greene’s paper is not specifically to endorse consequentialism as a normative moral theory, but much of his evidence seems, at the least, to cast the consequentialist judgment as the more favorable of the two options. Whereas deontological judgments are often “irrational” impulses, consequentialism relies on deliberative “cognition,” one of the defining features that separates humans from other animals. Consequentialism also involves notions of fairness and justice that deontological judgments seem to lack. Greene notes a common misconception that consequentialism does not respect the rights of individuals the way deontology does. This is mistaken; on the contrary, consequentialists value each person equally. Despite our discomfort at, for example, pushing the woman in the footbridge case, doing so may be necessary for the “greater good” if we are to treat each person equally and with respect. Adopting consequentialism does, however, involve some difficulties. For example, how would we reconcile it with our charitable decisions? A consequentialist would be obligated to donate most of their disposable income to people less fortunate than them, a behavior that, while possible, would be uncomfortable for most people. Discomfort, though, is not enough in itself to reject a theory, and should be an expected consequence of a good normative theory. A further drawback is that adopting the consequentialist worldview entails losing a sense of the “personal”: a consequentialist mother could not care “more” for her child than for a stranger, for example. As Greene notes, this line of thinking creates a slippery slope. Theoretically, a consequentialist would even be obligated to spend his time in particular ways; if listening to music or getting drinks with friends serves no functional purpose, shouldn’t he instead spend all his free time volunteering?
In this sense, there are arguments that “strong” consequentialism could be impractical as a normative moral theory. Greene makes a strong case that in many instances we would be better served by checking our emotional intuitions with rational deliberation, and I agree with him on that point. I am not ready, at least on the basis of Greene’s arguments, to draw any conclusions about the normative power of consequentialism.

Greene’s principal point, however, is that his empirical findings on the nature of our “deontological” judgments discredit deontology as a feasible normative moral theory. It is clear that rationalist deontology is not an apt class of descriptive theories because, in reality, we act in accordance with our adaptive intuitions and not a moral ruleset. But what bearing does this have on its normative plausibility? Because our intuitions are merely “blunt biological instruments” with a “simple and efficient design,” they do not always lead to positive or rational outcomes (46). This is clear in the footbridge case and in our punitive judgments. However, to move from the claim that our intuitions lack normative power to the claim that every deontological theory lacks normative power is to falsely equate the two. Greene argues that deontology is, at bottom, nothing more than a rationalization of our intuitions. He adds that deontology, while hard to define, is in all respects about “giv[ing] voice to powerful moral emotions” (50). I agree with Greene that in both the Kantian and the descriptive sense, deontology is a rationalization of our moral emotions and is not apt as a normative theory. Indeed, any theory that tracks our moral intuitions will lack normative power. However, I do not grant that a deontological moral theory necessarily needs to track these adaptive intuitions. Even though theories like Kant’s attempt to justify our intuitions, other theories need not. In other words, there could exist some deontological theory invoking specific rights and duties that, while it does not function as a valid descriptive theory, could serve as a normative one. Greene has done a sufficient job of discrediting classical deontological theories that attempt to justify our observed behavior, but I contend that he is far from proving that no deontological theory could have normative power.

Urban Exploration

Contact

If you want to reach me, check out all these options for doing that: